Views Materialization with DBT + Rockset

A demo where we walk through how to setup the dbt-Rockset Adapter and transform streaming data in real-time.

Show Notes

Nadine Farah:

Hi everyone. My name is Nadine and I'm from the Rockset team. Today I'm going to show you how you can transform data in real time using Rockset views and DBT. This is an example of streaming ELT, where you hit DBT run once, and your queries will reflect the latest data.

Nadine Farah:

For those of you who are new to DBT, DBT is an immensely popular open source data transformation tool. That's because in order to execute transformations, users need to find logic in SQL using select statements, and DBT will handle the DDL, DML in order of execution. Rockset is a real time analytics database that can ingest real time data and query it with sub-second latency. So let's get started.

Nadine Farah:

So the first thing we're going to do is we're going to make sure we're in our Python environment. It will look something like this. Then we're going to source it. Okay. So now that we're in an environment, we can PIP install DBT. I already have it installed just so that we can make this demo go a little faster.

Nadine Farah:

So the next thing we're going to do, is we're going to check our install with the version with DBT dash dash version. Now we're going to install the Rockset DBT adapter with PyPI. From here, we're going to create a DBT project called Rockset testing. So we're just creating our project right now, and it's created.

Nadine Farah:

I already have it opened here, and if I navigate towards testing dash Rockset and under models, you're going to see there are two models already created. One is called my first DBT model, and the second one is called my second DBT model. So these are the very files that we'll be changing as we write our SQL queries.

Nadine Farah:

So I'm going to go ahead and navigate back to my iTerm. So I just went ahead and cleared my screen. The next thing we want to do is we want to create a profile at code slash dot DBT slash profile dot YML. If it's not already there, you can go ahead and create one. It should look something like this. The two main things we want to do is we want to put in our Rockset API key and our Rockset workspace. So I'm going to go ahead and fill this in right now, before I continue.

Nadine Farah:

So now that we created our new DBT profile, we'll need to connect our DBT project with our new Rockset profile. Immediately under the project directory you'll see a file called DBT_project.yema, which is this file over here. Added the line in the file where it says profile, and you're going to see this default. You're going to change default to Rockset.

Nadine Farah:

So now I'm going to CD to testing Rockset, and now I'm going to head DBT run.

Nadine Farah:

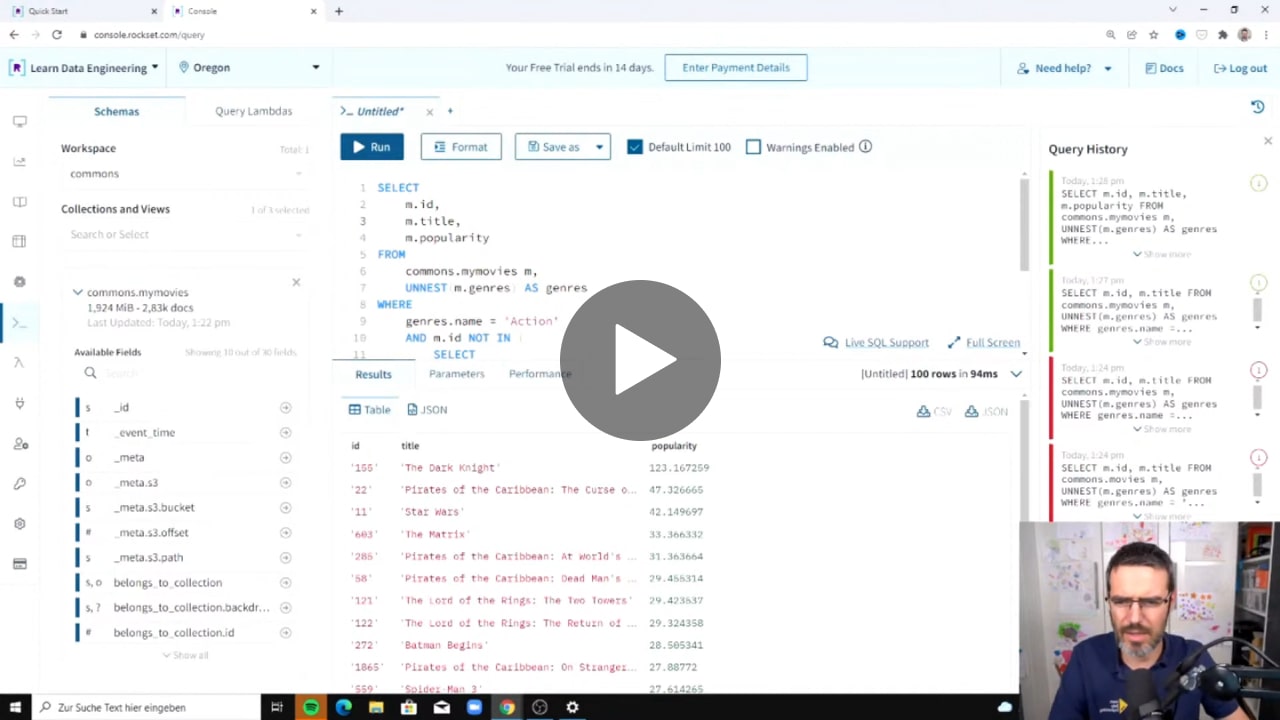

So now that this has finished running, let's go ahead and check this out at the Rockset console. I'm going to navigate to the Rockset console, and I'm going to go under overviews, collections, and if I go under views, you're going to see that there's my second DBT model. Now in my profile, I need my workspace DBT. This is why it's showing up DBT, but whatever you name your workspace, you'll find it. So I'm going to go ahead and click on this. You can already see what the SQL query looks like. This comes preceded when you installed the DBT project. Then I'm going to go ahead and open this up in the editor. Now, when I run it, you can see that I get my data back.

Nadine Farah:

So this is great. This is our hello world with DBT and Rockset. So switching gears now, what if we wanted to work with streaming data like Amazon Kinesis or Apache Kafka. First you'll need to set up an integration. An integration will look something like this. You're going to head to the integrations tab, and you're going to hit, ad integration. You can pick any streaming data that you want to work with, either Apache Kafka or Amazon Kinesis. We're going to be doing Amazon Kinesis today, so I'm going to go ahead and click on this data connector.

Nadine Farah:

And I'm going to go ahead and click start. So what I'll have to do is I'll have to fill out the integration name. This is for Rockset purpose only, and then I'll have to go to the IM service on Amazon and fill out the policy and then configure my AWS IM role, which is this information over here. So when you do a AWS cross account role, you'll have to put in your Rockset account ID and your external ID. Then once you create your role, you'll get a roll errand from Amazon, and you'll just type it in over here. You'll just copy it and paste it over here, and that's it. Then you'll save your integration. I already have something already set up, so we're going to use that. So, after you've created your integration. You're going to go ahead and create a collection from your integration.

Nadine Farah:

So I've named my collection, "Twitter Fire Hose DBT," and I already gave it my integration name, which is called "Rockset Demo Integration Kinesis." This is the integration we just created, and then you're going to give it your Kinesis stream name. This is something that you set up on AWS. So it's whatever name you put in when you created your Kinesis stream on AWS. Then you're going to pick the region that you created your stream in. So you're going to go ahead and put that there, and you're going to put your format with just JSON. So once you've done that, you'll see what a source preview looks like, and if this looks all great and dandy, you're going to go ahead and create a collection from this integration. So this is what I'm going to do right now. So now it's ready. So go ahead and query this collection, and you're going to see that when we query it, you can see that the data already exists. So this is great, but what does this look like for DBT? So now that we have our collection name, we can start creating transformations on our DBT. So I'm going to go ahead and navigate towards our project for DBT.

Nadine Farah:

Which will look something like this. So we have our first model and we have our second model. So I'm just going to go ahead and rearrange this really quickly. I'm going to go ahead and share some queries that I will write. In this transformation we are unnesting or flattening the NGD field, so we can extract the ticker symbol. The entities field looks something like this. You can see that it's an array of objects, and when we unnest it, we are able to create the structure. In our second transformation we are joining data with our first DBT model with data from S3. We want to see the most popular tweeted, ticker symbols with the company information. So now if we run it, and it's done. We now have a real time streaming ELT setup with Rockset views and DBT. If we navigate back to the console. So now if we go to overviews, collections and we go to the views tab, we can see that we have my second DBT model, which is the latest transformation that we did. If I open this up in the editor and I just delete all of this, and I just put select star for my second DBT model, and I run it, we get our data back.

Nadine Farah:

Rockset automatically keeps in sync with data from Kinesis, with a data latency with five seconds or less, as we can see using the adapter, you can load data into Rockset and create collections by writing SQL select statements in DBT. As shown in this walkthrough, these collections can then be built on top of one another to support highly complex data transformation with many dependency stages. The DBT Rockset adapters fully open source, and we would love to get your input and feedback. If you're interested in getting in touch with us, you can sign up here with a link down below, to join our beta partner program for the DBT Rockset adapter.

Recommended Videos

See Rockset in action

Real-time analytics at lightning speed